CLIP Interrogator

Description

Key Applications

- Generate detailed textual descriptions of images for AI art prompts

- Analyze and label large image datasets efficiently

- Assist in content creation, design, and creative workflows

- Improve accessibility through image captioning

Who It’s For

Pros & Cons

How It Compares

- Versus Manual Captioning: Automates and accelerates image description generation.

- Versus Basic Image Recognition Tools: Provides rich descriptive context, not just labels.

- Versus Other AI Prompt Tools: Focused on detailed and accurate image-to-text conversion.

Bullet Point Features

- AI-powered image analysis and captioning

- Generates detailed prompts for AI image generation

- Supports batch processing of images

- Provides context-aware textual descriptions

- Integrates with creative and research workflows

Frequently Asked Questions

Find quick answers about this tool’s features, usage ,Compares, and support to get started with confidence.

CLIP Interrogator is an AI‑powered image analysis tool that uses the CLIP (Contrastive Language–Image Pre‑training) model to examine an image and generate detailed text descriptions or prompts based on what it “sees.” This bridges visual content and natural language by interpreting objects, styles, and visual elements in the image and converting them into text you can use for analysis or creative tasks.

CLIP Interrogator is especially useful for prompt engineering — it turns an existing image into a rich text prompt that can be used with AI image generation tools like Stable Diffusion or MidJourney. By analyzing the image and suggesting relevant descriptive terms, styles, and keywords, it gives creators a powerful starting point for recreating or expanding on visual concepts.

The tool typically combines two models: BLIP, which generates a base caption describing the image content, and CLIP, which refines that description by matching it against a broad vocabulary of related phrases, styles, and descriptors. This layered process results in detailed, semantically rich text that captures both content and context.

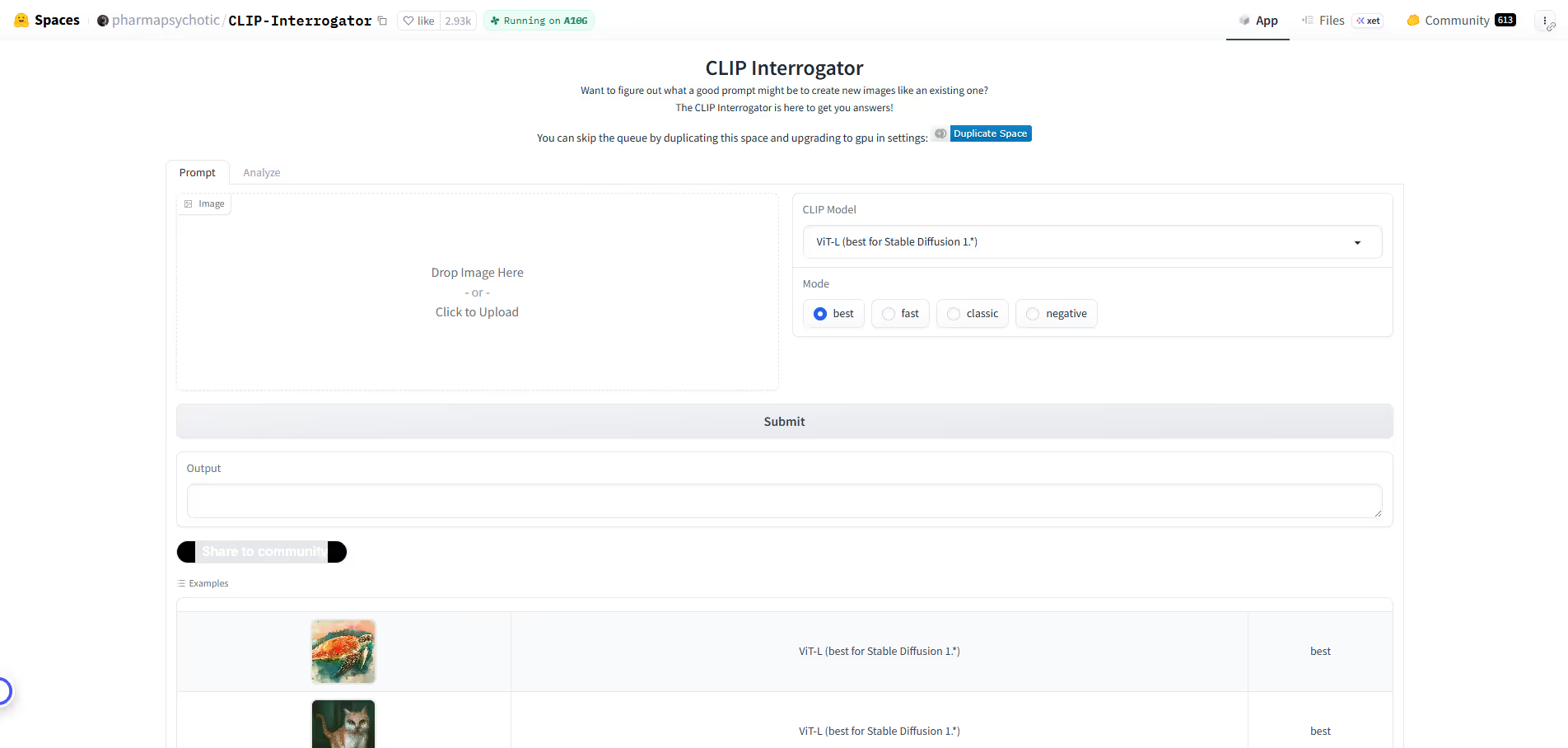

No — you can use web‑based instances of CLIP Interrogator without coding. Many implementations are available on platforms like Hugging Face as interactive applications where you simply upload an image and receive descriptive text or prompts. Advanced users can also run it locally through Python or integrate it into custom workflows.

CLIP Interrogator is ideal for AI artists, prompt engineers, and creative professionals who work with image generation tools and need descriptive prompts fast. It’s also useful for researchers and developers exploring image‑to‑text models or building systems that require meaningful text descriptions based on visual data.

.avif)