Pinecone

Description

Key Applications

- Semantic Search: Power search that matches on meaning rather than exact keywords by comparing vector embeddings for similarity.

- Recommendation Engines: Build personalized recommendation systems that suggest items similar to what a user has already viewed, liked, or purchased.

- AI-Powered Content Personalization: Deliver tailored content, products, or services to users based on their current context and recent behavior.

- Anomaly Detection: Identify unusual patterns and outliers, such as data points whose embeddings sit far from those of typical records.
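The recommendation use cases above come down to a nearest-neighbour lookup. The sketch below is a minimal, self-contained illustration and does not use Pinecone's API. It assumes each item already has an embedding; the toy 3-dimensional vectors are made up. It builds a simple user profile by averaging the embeddings of liked items, then ranks the remaining items by cosine similarity. In a Pinecone-backed system, the ranking step would be a query against the index rather than the in-memory loop shown here. The names `mean_vector` and `recommend` are hypothetical.

```python
import math
from typing import Dict, List, Set

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mean_vector(vectors: List[Vector]) -> Vector:
    """Element-wise average, used here as a simple user 'taste profile'."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def recommend(liked: Set[str], catalog: Dict[str, Vector], k: int = 2) -> List[str]:
    """Rank items the user has not interacted with by similarity to their profile."""
    if not liked:
        return []  # no history, so there is nothing to build a profile from
    profile = mean_vector([catalog[item] for item in liked])
    candidates = [
        (item, cosine(profile, vec))
        for item, vec in catalog.items()
        if item not in liked
    ]
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in candidates[:k]]


# Toy "embeddings", made up for illustration.
catalog = {
    "thriller-1": [0.9, 0.1, 0.0],
    "thriller-2": [0.8, 0.2, 0.0],
    "thriller-3": [0.85, 0.1, 0.05],
    "romance-1": [0.1, 0.9, 0.0],
    "cooking-1": [0.0, 0.1, 0.9],
}
print(recommend({"thriller-1", "thriller-2"}, catalog))  # ['thriller-3', 'romance-1']
```

Averaging is only one way to build a profile. Weighting recent interactions more heavily is a common variation.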

Who It’s For

Pros & Cons

How It Compares

- Versus Elasticsearch: Pinecone is purpose-built for vector search with real-time performance, while Elasticsearch is primarily a keyword-based (full-text) search engine that has added vector search as an additional capability.

- Versus FAISS: FAISS is an open-source similarity search library that you host and operate yourself, whereas Pinecone is a fully managed service that takes care of infrastructure, scaling, and maintenance.

- Versus Weaviate: Pinecone is positioned as a fully managed service for large-scale, real-time AI applications, while Weaviate is open source and can also be self-hosted, which gives more control at the cost of more operational work.

Key Features

- Fully managed vector database for high-performance search and recommendations

- Real-time indexing and retrieval capabilities

- Scalable infrastructure for large datasets and applications

- Straightforward API and client SDKs for integrating with AI-driven applications

- Compatibility with popular machine learning frameworks and tools
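Loading a large dataset usually means sending writes in batches rather than one request per vector. The helper below is a hedged sketch of that batching step only. `send_batch` is a hypothetical stand-in for whatever client call performs the upsert. The default batch size of 100 is an assumption, not a documented Pinecone limit.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Tuple

Record = Tuple[str, List[float]]  # (id, vector)


def batched(records: Iterable[Record], size: int) -> Iterator[List[Record]]:
    """Yield consecutive lists of at most `size` records."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(records)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def upsert_all(
    records: Iterable[Record],
    send_batch: Callable[[List[Record]], None],
    batch_size: int = 100,  # assumed default; tune for your payload size
) -> int:
    """Send every record in fixed-size batches; return the number of batches sent."""
    count = 0
    for batch in batched(records, batch_size):
        send_batch(batch)  # hypothetical: one upsert request per batch
        count += 1
    return count


records = [(f"id-{i}", [float(i), 0.0]) for i in range(250)]
sent: List[List[Record]] = []
print(upsert_all(records, sent.append, batch_size=100))  # 3
print([len(batch) for batch in sent])  # [100, 100, 50]
```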

Frequently Asked Questions


Pinecone is a vector database and similarity search platform purpose-built for AI applications that work with large volumes of unstructured data such as text and images. Instead of storing traditional rows and columns, Pinecone stores the vector representations (embeddings) that AI models generate from that data. This lets developers build applications that find semantically similar items, perform recommendation tasks, and power retrieval-augmented generation (RAG) workflows faster and more efficiently than with a general-purpose database.
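At its core, similarity search means scoring a query vector against stored vectors and keeping the best matches. The sketch below does this by brute force over a Python dict, for the three distance metrics Pinecone supports. The metric strings mirror the names Pinecone uses (`cosine`, `dotproduct`, `euclidean`). This is an illustration of the concept only: production vector databases typically use approximate nearest-neighbour indexes to avoid scanning every vector. The `top_k` function is hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: Vector, b: Vector) -> float:
    norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / norm if norm else 0.0


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query: Vector, vectors: Dict[str, Vector], k: int = 3,
          metric: str = "cosine") -> List[Tuple[str, float]]:
    """Exact (brute-force) search: score every stored vector, keep the best k."""
    scorers = {
        "cosine": (cosine, True),         # higher similarity = closer
        "dotproduct": (dot, True),        # higher score = closer
        "euclidean": (euclidean, False),  # smaller distance = closer
    }
    if metric not in scorers:
        raise ValueError(f"unknown metric: {metric}")
    score_fn, higher_is_closer = scorers[metric]
    scored = [(vid, score_fn(query, v)) for vid, v in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=higher_is_closer)
    return scored[:k]


vectors = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7], "d": [3.0, 3.0]}
query = [1.0, 0.1]
print(top_k(query, vectors, k=1, metric="cosine")[0][0])      # a
print(top_k(query, vectors, k=1, metric="dotproduct")[0][0])  # d
print(top_k(query, vectors, k=1, metric="euclidean")[0][0])   # a
```

With dot product, the long vector `d` wins because its score grows with vector length. Cosine ignores length and compares only direction, so `a` wins.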

When modern AI models convert content such as text or images into numerical vectors, those vectors capture semantic meaning beyond keywords. Pinecone lets developers index and query those vectors with high performance, returning the content that is most similar in meaning rather than only exact keyword matches. This makes it well suited to tasks like retrieving relevant documents for a chatbot, finding similar product descriptions, or grouping related customer feedback, all at production scale.
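A retrieval-augmented generation (RAG) step, like the chatbot example above, typically retrieves the top-matching text chunks and passes them to a language model as context. The sketch below shows that flow under stated assumptions:

- The chunk and question vectors are made-up placeholders for real embeddings.
- Retrieval is a brute-force cosine ranking standing in for a Pinecone query.
- The `Chunk`, `retrieve`, and `build_prompt` names and the prompt wording are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class Chunk:
    id: str
    text: str
    vector: Vector  # assumed: precomputed by an embedding model


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: Vector, chunks: List[Chunk], k: int = 2) -> List[Chunk]:
    """Return the k chunks most similar to the query (stand-in for a vector DB query)."""
    return sorted(chunks, key=lambda c: cosine(query_vec, c.vector), reverse=True)[:k]


def build_prompt(question: str, query_vec: Vector, chunks: List[Chunk], k: int = 2) -> str:
    """Place the retrieved chunks into the prompt as grounding context."""
    context = "\n\n".join(f"[{c.id}] {c.text}" for c in retrieve(query_vec, chunks, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


chunks = [
    Chunk("refunds", "Refunds are issued within 14 days.", [0.9, 0.1, 0.0]),
    Chunk("shipping", "Orders ship within 2 business days.", [0.1, 0.9, 0.0]),
    Chunk("careers", "We are hiring engineers.", [0.0, 0.1, 0.9]),
]
# Placeholder embedding for the question below.
print(build_prompt("How long do refunds take?", [0.8, 0.3, 0.0], chunks))
```

The resulting prompt string would then be sent to whichever language model the application uses.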

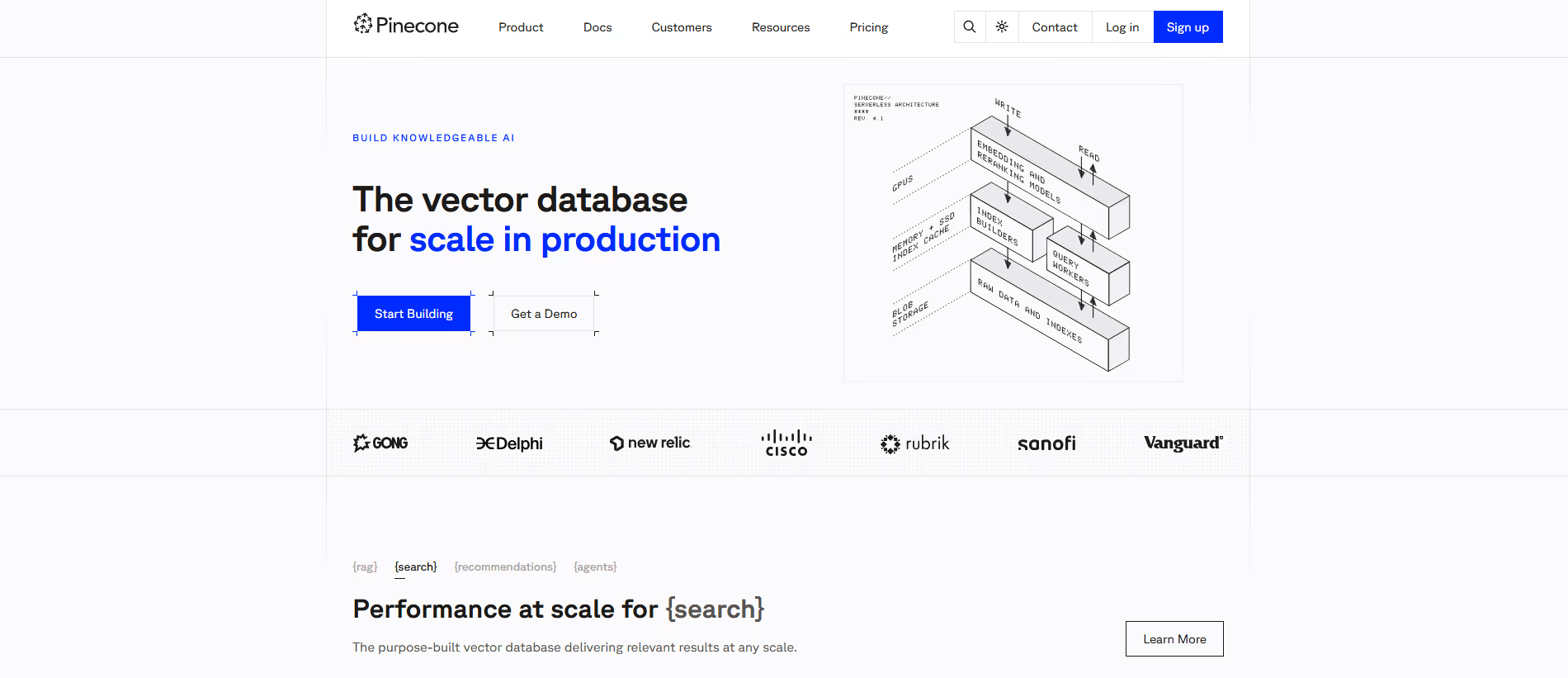

Pinecone provides a hosted vector database with:

- scalable indexing and querying
- low-latency retrieval
- automatic replication and fault tolerance
- namespaces that isolate data for multi-tenant apps

It supports multiple distance metrics (cosine, dot product, and Euclidean), metadata filtering, and hybrid search that combines dense and sparse vectors. It also integrates with popular embedding model providers (e.g., OpenAI, Cohere, Hugging Face), making it easy to plug into an existing AI stack.
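Namespaces and metadata filtering both narrow which vectors a query considers. The in-memory sketch below models that behaviour; it is not Pinecone's implementation.

- Each namespace is a separate dict, so a query in one tenant's namespace never sees another tenant's records.
- The filter supports a small subset of MongoDB-style operators (`$eq`, `$ne`, `$gt`, `$gte`, `$lt`, `$lte`, `$in`, `$nin`), similar to those used in Pinecone's filter syntax.
- The `NamespacedStore` class and the `matches` helper are hypothetical.
- The rule that a record missing a filtered field never matches is an assumption of this sketch.

```python
import math
from typing import Any, Callable, Dict, List, Optional, Tuple

Vector = List[float]
Metadata = Dict[str, Any]

# A small subset of filter operators, for illustration only.
OPS: Dict[str, Callable[[Any, Any], bool]] = {
    "$eq": lambda value, operand: value == operand,
    "$ne": lambda value, operand: value != operand,
    "$gt": lambda value, operand: value > operand,
    "$gte": lambda value, operand: value >= operand,
    "$lt": lambda value, operand: value < operand,
    "$lte": lambda value, operand: value <= operand,
    "$in": lambda value, operand: value in operand,
    "$nin": lambda value, operand: value not in operand,
}


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def matches(metadata: Metadata, flt: Dict[str, Any]) -> bool:
    """True if every field condition holds (top-level keys are ANDed)."""
    for field, condition in flt.items():
        if field not in metadata:
            return False  # assumption: a missing field never matches
        if not isinstance(condition, dict):
            condition = {"$eq": condition}  # bare value = equality shorthand
        for op, operand in condition.items():
            if op not in OPS:
                raise ValueError(f"unsupported operator: {op}")
            if not OPS[op](metadata[field], operand):
                return False
    return True


class NamespacedStore:
    def __init__(self) -> None:
        # namespace -> record id -> (vector, metadata)
        self._data: Dict[str, Dict[str, Tuple[Vector, Metadata]]] = {}

    def upsert(self, namespace: str, vid: str, vector: Vector,
               metadata: Optional[Metadata] = None) -> None:
        self._data.setdefault(namespace, {})[vid] = (vector, metadata or {})

    def query(self, namespace: str, vector: Vector, top_k: int = 3,
              flt: Optional[Dict[str, Any]] = None) -> List[Tuple[str, float]]:
        records = self._data.get(namespace, {})  # other namespaces are never scanned
        hits = [
            (vid, cosine(vector, vec))
            for vid, (vec, meta) in records.items()
            if flt is None or matches(meta, flt)
        ]
        hits.sort(key=lambda hit: hit[1], reverse=True)
        return hits[:top_k]


store = NamespacedStore()
store.upsert("tenant-a", "doc1", [1.0, 0.0], {"lang": "en", "year": 2023})
store.upsert("tenant-a", "doc2", [0.9, 0.1], {"lang": "de", "year": 2024})
store.upsert("tenant-a", "doc3", [0.0, 1.0], {"lang": "en", "year": 2024})
store.upsert("tenant-b", "doc9", [1.0, 0.0], {"lang": "en", "year": 2024})

print(store.query("tenant-a", [1.0, 0.0], flt={"lang": "en", "year": {"$gte": 2024}}))
# [('doc3', 0.0)]
```

The filter is applied before ranking, so `doc3` is returned even though its vector is the least similar in `tenant-a`. Of the remaining records, `doc1` fails the year condition and `doc2` fails the language condition.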

Pinecone is built for production use, with horizontal scaling, access control, and service level agreements (SLAs). Teams can start with small deployments during development and scale up as data size and query demand grow, without major changes to application logic. This combination of reliability and low operational overhead makes Pinecone suitable for startups and large enterprises alike.
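Horizontal scaling generally means spreading vectors across several partitions and merging per-partition results at query time. The scatter-gather sketch below illustrates that general idea. It is not a description of Pinecone's internal architecture, which the service manages for you.

- Records are assigned to shards by a CRC32 hash of their ID, an arbitrary choice for this example.
- Each shard returns its local top-k.
- The partial lists are merged into a global top-k.

The `ShardedIndex` class is hypothetical.

```python
import heapq
import math
import zlib
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ShardedIndex:
    """Toy scatter-gather index; illustrative only, not Pinecone's design."""

    def __init__(self, num_shards: int) -> None:
        self.shards: List[Dict[str, Vector]] = [{} for _ in range(num_shards)]

    def _shard_for(self, vid: str) -> int:
        # Stable hash, so the same ID always lands on the same shard.
        return zlib.crc32(vid.encode("utf-8")) % len(self.shards)

    def upsert(self, vid: str, vector: Vector) -> None:
        self.shards[self._shard_for(vid)][vid] = vector

    def query(self, vector: Vector, top_k: int = 3) -> List[Tuple[str, float]]:
        partials: List[Tuple[float, str]] = []
        for shard in self.shards:  # a real system would query shards in parallel
            scored = [(cosine(vector, v), vid) for vid, v in shard.items()]
            partials.extend(heapq.nlargest(top_k, scored))  # local top-k per shard
        best = heapq.nlargest(top_k, partials)  # global merge
        return [(vid, score) for score, vid in best]


sharded, single = ShardedIndex(4), ShardedIndex(1)
for i in range(20):
    vec = [math.cos(i), math.sin(i)]
    sharded.upsert(f"vec-{i}", vec)
    single.upsert(f"vec-{i}", vec)

print(sharded.query([1.0, 0.0], top_k=3) == single.query([1.0, 0.0], top_k=3))  # True
```

Because each shard contributes its own best k candidates, the global top k is always among the merged partial results. Adding shards therefore spreads storage and scoring work without changing query results.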

Pinecone is aimed at AI developers, data teams, ML engineers, and product teams building applications that rely on fast semantic search, recommendations, or contextual retrieval. Users can expect more relevant results for meaning-based queries than keyword matching alone provides, plus low-latency retrieval at scale. They also get a simpler path to RAG, personalization, and recommendation systems, without building and operating vector infrastructure from scratch.
